Deepfakes use artificial intelligence to create fake videos, images, or audio that look and sound real. What started as a niche technology in 2017 has evolved into a significant threat to criminal justice systems worldwide.

A systematic review by researchers at University College London has revealed how these AI-generated fakes are undermining court evidence, enabling new types of crime, and eroding trust in legal institutions. The technology’s accessibility and rapid advancement create unprecedented challenges for judges, lawyers, and law enforcement.

The scale of the threat is substantial. A 2019 study cited by Sandoval et al. identified over 14,600 deepfake videos online. By 2024, the technology has become so sophisticated that even experts struggle to distinguish real from fake content when it is presented as evidence in criminal cases.

This surge in deepfake technology doesn’t just affect individual court cases; it threatens the fundamental ability of criminal justice systems to establish truth and deliver justice.

High-Profile Deepfakes: From War Propaganda to Financial Heists

A deepfake video of President Zelensky spread panic through Ukraine during the Russian invasion. The manipulated footage showed Ukraine’s leader appearing to tell his soldiers to lay down their arms and surrender to Russian forces. As the video circulated to over 120,000 viewers, Ukrainian officials rushed to debunk the dangerous propaganda. The incident revealed how deepfakes could be weaponized during military conflicts to create chaos and undermine leadership.

Corporate employees in Hong Kong thought they were in a routine video conference with their chief financial officer. Instead, they were watching a sophisticated AI impersonation that fooled multiple staff members. The scammers used deepfake technology to perfectly mimic the CFO’s face and voice, convincing the team to transfer $25.6 million to fraudulent accounts. This 2024 heist demonstrated how deepfakes enable elaborate financial crimes that bypass traditional security measures.

In Thailand, victims received video calls from what appeared to be police officers conducting official business. The “officers” were actually criminals using AI-generated faces and voices to lend authority to their extortion schemes. The scam worked by exploiting people’s instinct to comply with law enforcement, revealing how deepfakes can hijack institutional trust for criminal gain.

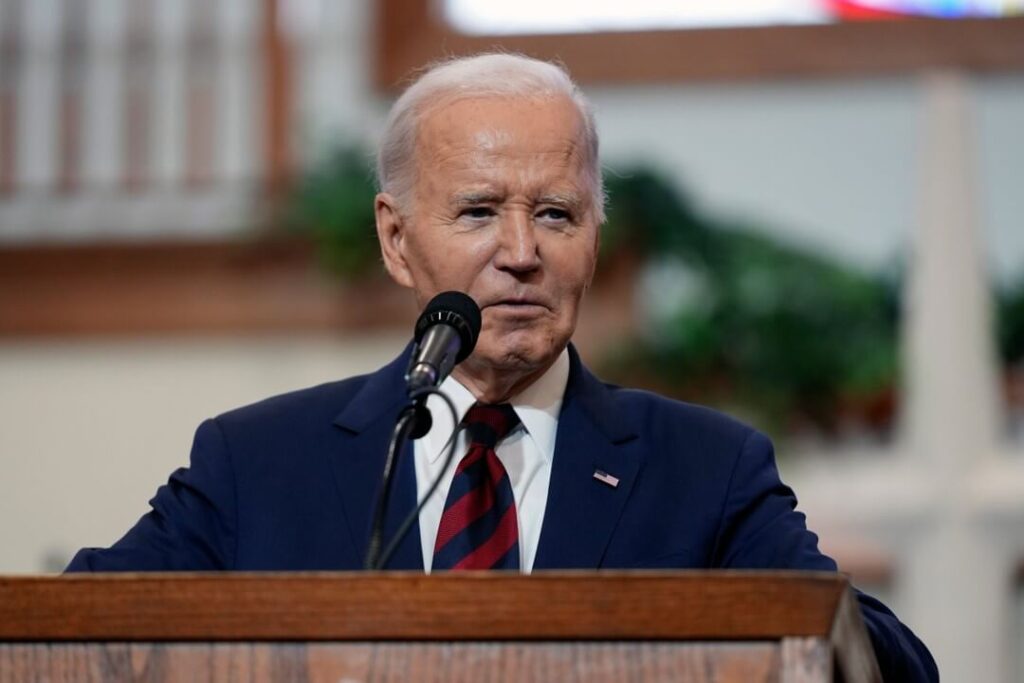

New Hampshire voters received an unexpected call in January 2024 – President Biden’s voice telling Democrats to skip voting in the primary. But Biden never made the call. Scammers had used AI to clone his voice, creating a deepfake robocall designed to suppress voter turnout. The incident sparked investigations and raised alarms about deepfakes targeting electoral processes.

These cases reveal deepfakes’ power to target different aspects of society, from national security to financial systems, law enforcement to democratic processes. For courts handling criminal cases, each incident adds new complexity to the challenge of determining what digital evidence they can trust.

When Seeing Isn’t Believing: Three Critical Challenges Facing Courts

The rise of deepfake technology has created unprecedented challenges for courts worldwide, fundamentally changing how the legal system handles digital evidence. The University College London review highlights three major threats that are reshaping legal proceedings:

- The Crumbling Trust in Video Evidence: Courts have long relied on video evidence as a trustworthy witness, a principle known as the “silent witness theory.” However, this foundation is now shaking. In 2023, a UK court case highlighted this new reality when jurors’ awareness of deepfake technology cast doubt on authentic video evidence, even after its authenticity was proven.

- The Attribution Puzzle: Prosecutors now face a complex web of challenges when building cases involving digital evidence. They must prove who created the content, track how it was distributed, and establish jurisdiction when content crosses international borders. Traditional methods of building a case have been turned upside down by the ability to create perfect digital forgeries.

- The Evidence Manipulation Crisis: The threat goes beyond fake videos. Today’s courts face manipulated security footage, fabricated audio recordings, and altered documents. Without standardized procedures to verify digital evidence against AI manipulation, judges and juries are left in uncharted territory. Even more concerning, the mere possibility of deepfakes casts a shadow of doubt over all digital evidence, whether manipulated or not.

As one judge noted: “We’re trying to apply 20th-century legal principles to 21st-century technology.” The challenge isn’t just about spotting fakes; it’s about maintaining the integrity of legal proceedings in an age where perfect digital forgeries are possible.

The Dark Side of Deepfake Technology: A Criminal Revolution

The rise of deepfake technology has ushered in disturbing new frontiers of criminal activity, with non-consensual content emerging as its most pervasive manifestation.

An alarming study from 2019 revealed a troubling reality. Of the 14,600 deepfake videos analyzed, 96% were pornographic in nature, predominantly targeting women by digitally inserting their faces into explicit content without consent. The automated nature of this technology has enabled mass production of these harmful materials, creating a crisis that shows no signs of slowing.

The victims of these crimes often suffer in silence. Many face a perfect storm of obstacles: inadequate legal protections, paralyzing fear of further exposure, the near-impossibility of identifying their perpetrators, and the frustrating reality that once content spreads online, removal options become severely limited.

Financial criminals have also embraced deepfake technology with frightening sophistication. A particularly striking case involved a British energy company where fraudsters deployed audio deepfakes to mimic a CEO’s voice. By exploiting the natural human instinct to obey authority figures, they successfully convinced an employee to transfer €220,000 to a fraudulent account. This represents just one facet of a broader trend, as criminals deploy deepfakes across a spectrum of financial schemes. From creating convincing video calls for romance scams to cloning voices for business email compromise, from generating fake photos for identity theft to creating fraudulent executive announcements to manipulate markets – the criminal applications seem boundless.

The threat to information integrity poses perhaps the most far-reaching danger. Election cycles have become particularly vulnerable as deepfake videos showing candidates making inflammatory statements or endorsing opposing policies spread like wildfire through social media, outpacing fact-checkers’ ability to respond.

Military operations have entered a dangerous new era where deepfakes pose a strategic threat. Adversaries can now fabricate authentic-looking battlefield footage, manipulate intelligence about troop positions, and even issue counterfeit orders that appear to come from commanding officers. The technology’s ability to generate convincing propaganda adds another layer of warfare, where information becomes a powerful weapon that can sow confusion and undermine military operations within minutes.

What makes this threat particularly pernicious is the scale at which it operates. Thanks to automation, a single criminal can now produce hundreds of convincing fakes, creating an overwhelming challenge for law enforcement. Each incident demands significant time and technical expertise to investigate, yet new fakes can be generated in minutes. This asymmetry between creation and investigation has created a resource crisis that threatens to overwhelm traditional law enforcement approaches.

Law Enforcement Challenges: Fighting Crime in the Age of AI

Verifying digital evidence has become a daunting task for modern investigators, who must now approach every file with skepticism. This new reality was sharply illustrated in 2023, when the London Metropolitan Police spent weeks verifying the authenticity of surveillance footage in a fraud case before it could be presented in court.

The challenges facing law enforcement are multifaceted. Traditional forensic methods struggle to detect sophisticated AI manipulation, while victims face the nearly impossible task of proving content is fake. Digital trails often lead to anonymous creators, and evidence quality deteriorates as files spread across networks. Adding to this complexity, cases frequently span multiple jurisdictions, requiring intricate international cooperation.

The global nature of deepfake crime presents unique hurdles for investigation and prosecution. A single case might involve perpetrators, servers, victims, and payment systems scattered across different countries. While catching deepfake criminals requires international teamwork, differences in laws and technical resources between countries continue to hamper investigations.

Law enforcement has developed a two-pronged approach to detecting deepfakes. Technical analysis employs digital forensics, AI detection algorithms, audio analysis, and blockchain tracking, while human intelligence relies on traditional methods like witness interviews, background checks, and behavioral analysis. Yet despite these efforts, detection technology consistently lags behind criminal innovation. As one investigator grimly noted: “By the time we develop detection methods, criminals have already found new ways to make their fakes more convincing.”
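One basic building block of the digital forensics mentioned above is a cryptographic fingerprint recorded when a file is collected. The sketch below is illustrative only: the function names and the sample bytes are hypothetical, and a matching hash shows only that a file has not changed since it was logged. It says nothing about whether the content was a deepfake to begin with.

```python
import hashlib


def sha256_fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a piece of digital evidence."""
    return hashlib.sha256(data).hexdigest()


def is_unaltered(data: bytes, recorded_fingerprint: str) -> bool:
    """True if the data still matches the fingerprint logged at collection."""
    return sha256_fingerprint(data) == recorded_fingerprint


# Hypothetical stand-in for the bytes of a collected video file.
original = b"frame-data-from-camera-7"
logged = sha256_fingerprint(original)  # recorded when the file is collected

tampered = original + b"!"  # any later change, however small

print(is_unaltered(original, logged))  # True
print(is_unaltered(tampered, logged))  # False
```

A check like this addresses alteration after collection; detecting content that was synthetic from the start still depends on the AI detection and human intelligence methods described above.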

Legal Responses Worldwide: Racing to Regulate Deepfakes

In April 2024, the UK took decisive action by criminalizing sexually explicit deepfakes through the Online Safety Act, introducing unlimited fines and potential jail time for offenders. However, significant gaps remain in addressing other forms of deepfake crime, often leaving prosecutors struggling to find applicable charges.

The United States has adopted a fragmented approach, with regulations varying by state. While California restricts political deepfakes and Texas prohibits harmful uses, no comprehensive federal legislation exists. Two promising bills – the NO FAKES Act and DEFIANCE Act – aim to address voice impersonation and non-consensual deepfakes, though neither has yet passed.

The European Union leads the global response with its comprehensive AI Act, which includes:

- Mandatory disclosure of AI-generated content

- Strict controls on deepfake creation

- Enhanced content traceability requirements

- Substantial penalties for violations

However, significant challenges persist in enforcement. Law enforcement agencies struggle with proving criminal intent, establishing jurisdiction, and keeping pace with rapidly evolving technology. As one judge noted, “Our legal framework wasn’t built for a world where seeing isn’t believing.”

Protecting Justice: Solutions for a Synthetic Future

To address these challenges, courts are developing new standards for digital evidence handling and authentication. A promising “tiered evidence” system would assign different weights to digital evidence based on verification levels and corroborating proof. Law enforcement agencies are also upgrading their capabilities through enhanced digital forensics training and AI detection tools.
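To make the “tiered evidence” idea concrete, here is a minimal sketch of how weights might be assigned. Everything in it is an assumption made for illustration: the tier names, the numeric weights, and the corroboration bonus do not come from the review or from any court.

```python
from dataclasses import dataclass
from enum import Enum


class VerificationTier(Enum):
    """Hypothetical tiers; the source does not define specific levels."""
    UNVERIFIED = 0
    PROVENANCE_DOCUMENTED = 1
    FORENSICALLY_EXAMINED = 2


# Illustrative base weights only, not values proposed by any court.
BASE_WEIGHT = {
    VerificationTier.UNVERIFIED: 0.2,
    VerificationTier.PROVENANCE_DOCUMENTED: 0.5,
    VerificationTier.FORENSICALLY_EXAMINED: 0.8,
}

CORROBORATION_BONUS = 0.1  # assumed bonus per independent corroborating item
MAX_WEIGHT = 1.0


@dataclass
class DigitalEvidence:
    label: str
    tier: VerificationTier
    corroborating_items: int = 0


def evidence_weight(item: DigitalEvidence) -> float:
    """Combine the tier's base weight with a capped corroboration bonus."""
    weight = BASE_WEIGHT[item.tier] + CORROBORATION_BONUS * item.corroborating_items
    return round(min(weight, MAX_WEIGHT), 2)


clip = DigitalEvidence("unverified clip", VerificationTier.UNVERIFIED)
cctv = DigitalEvidence("examined CCTV", VerificationTier.FORENSICALLY_EXAMINED, 1)

print(clip.label, evidence_weight(clip))  # unverified clip 0.2
print(cctv.label, evidence_weight(cctv))  # examined CCTV 0.9
```

The point of the sketch is the structure rather than the numbers: verification level sets a baseline, and independent corroboration can raise the weight only up to a cap.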

Research into detection technologies continues to advance, with China leading the effort: 51 Chinese universities work on deepfake detection, compared with 38 in all other countries combined. However, new threats continue to emerge, from real-time deepfake video calls to synthetic witness testimony, requiring legal systems to adapt rapidly.

Legal frameworks designed for physical evidence and human testimony must evolve to meet these new challenges. The future of justice depends on our ability to verify truth in an increasingly synthetic world.

Recommendations

The researchers propose essential changes:

- Updated evidence laws

- International cooperation frameworks

- Public education programs

- Mandatory content authentication

- Increased funding for detection research

Without these changes, deepfakes threaten to undermine public trust in legal institutions and criminal justice processes. As one researcher concluded: “The integrity of our justice system depends on our ability to establish truth. Deepfakes challenge that fundamental principle.”

Sources:

- Sandoval, M. P., de Almeida Vau, M., Solaas, J. et al. Threat of deepfakes to the criminal justice system: a systematic review. Crime Sci 13, 41 (2024). https://doi.org/10.1186/s40163-024-00239-1

- Folk, Z. (2024, February 23). Magician created AI-generated Biden robocall in New Hampshire for Democratic consultant, report says. Forbes.

- Milmo, D. (2024, February 5). Company working in Hong Kong pays out £20m in deepfake video call scam. The Guardian.

- Staff Writers. (2022, April 29). Don’t fall for ‘Deepfake’ video calls from scammers: police. The Nation.

- Wakefield, J. (2022, March 18). Deepfake presidents used in Russia-Ukraine war. BBC News.

Article Citation:

Guy, F. (2025, February 17). The Deepfake Crisis: How AI is Reshaping Criminal Justice. Crime Traveller. Retrieved from https://www.crimetraveller.org/2025/02/the-deepfake-crisis-ai-criminal-justice/